Hello! I am a Canadian software developer from Southern Alberta. I graduated from the University of Waterloo with a MASc in systems design engineering and from the University of Lethbridge with a BSc in computer science. I currently live in Toronto, Ontario and work as a software developer at IBM.

Personal Projects / Research

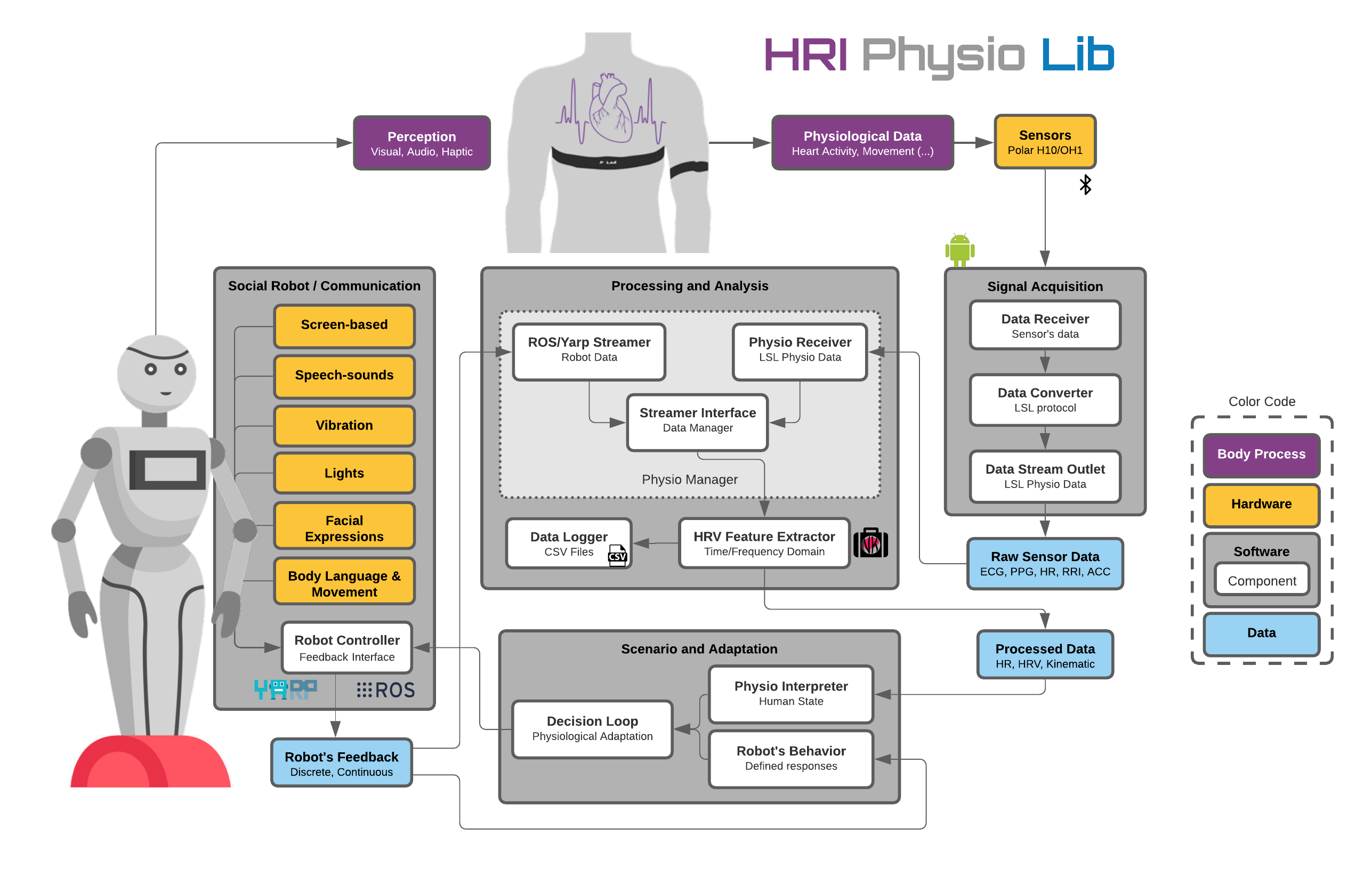

Accessible Integration of Physiological Adaptation in Human-Robot Interaction

My MASc thesis focused on the integration of physiological adaptation in human-robot interaction. I developed a conceptual framework for providing physiological data to robotic systems for real-time adaptation. Due to the COVID-19 pandemic, human participant research was restricted, which led me to implement a simple proof-of-concept scenario involving a physiologically aware robotic exercise coach.

The source code can be found here.

High-level architecture of the HRI Physio Lib, providing a road map for the integration of physiological data into robotics frameworks.

The physiologically aware robotic exercise coach, QT, would begin the scenario with a calibration phase to determine the participant's resting heart rate.

The QT robot would lead the participant through a variety of cardio activities on an aerobic step platform, with the goal of maintaining their heart rate within the prescribed zone.

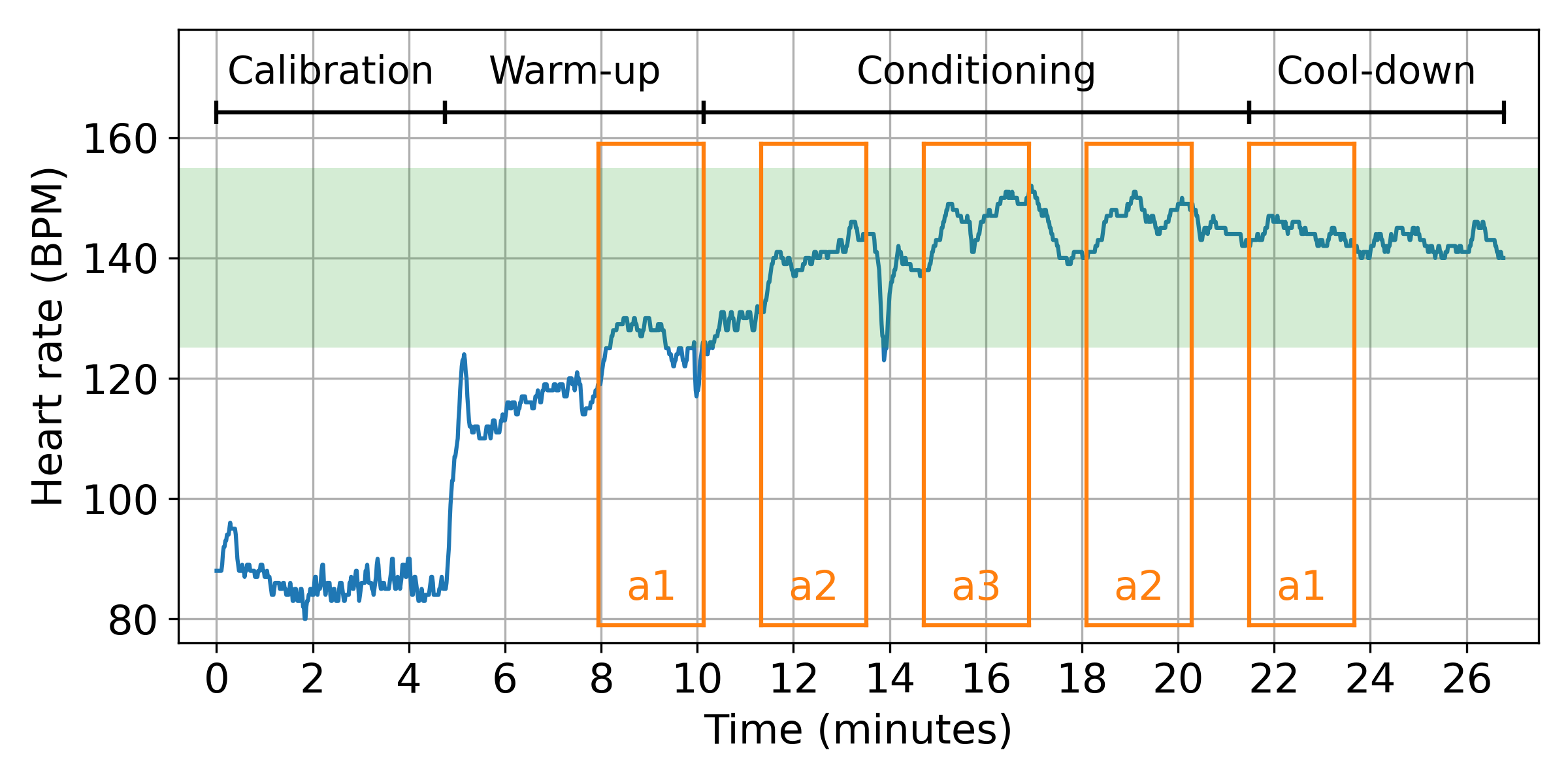

Change in heart rate while exercising with the QT Cardio-Aware Coach. The orange boxes show the time when different activities occurred. Throughout the scenario, the robot would increase or decrease the pace of the activity when the participant's heart rate was outside of the target zone.

The participant would perform a variety of exercises with the QT robot. The screen on the table would show a looping video of a person performing the exercise, while the QT robot mimicked similar motions with its arms. The pace of the video and the robot's movements would be adjusted along with the music to keep the participant's heart rate within the target zone.
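
The pace adjustment described above can be illustrated with a minimal sketch. This is not the thesis implementation: the class name `PaceController`, the zone bounds, the step size, and the pace limits are all hypothetical values chosen for illustration. It only shows the general idea of speeding up when the heart rate is below the target zone and slowing down when it is above.

```python
from dataclasses import dataclass


@dataclass
class PaceController:
    """Hypothetical pace controller; all names and defaults are assumptions."""
    zone_low: float          # lower bound of the target heart-rate zone (bpm)
    zone_high: float         # upper bound of the target heart-rate zone (bpm)
    pace: float = 1.0        # playback-speed multiplier for video, motion, music
    step: float = 0.05       # how much the pace changes per update
    min_pace: float = 0.5    # slowest allowed pace
    max_pace: float = 1.5    # fastest allowed pace

    def update(self, heart_rate: float) -> float:
        """Return the new pace given the latest heart-rate reading."""
        if heart_rate < self.zone_low:
            # Below the zone: speed the activity up, capped at max_pace.
            self.pace = min(self.max_pace, self.pace + self.step)
        elif heart_rate > self.zone_high:
            # Above the zone: slow the activity down, floored at min_pace.
            self.pace = max(self.min_pace, self.pace - self.step)
        # Inside the zone: leave the pace unchanged.
        return self.pace


coach = PaceController(zone_low=110, zone_high=130)
for bpm in (100, 120, 140):
    print(bpm, round(coach.update(bpm), 2))
```

A single shared multiplier is used here so that the video, the robot's movements, and the music would stay in sync; a real system would likely also smooth the heart-rate signal before acting on it.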

Related publications:

Embodied Intelligence

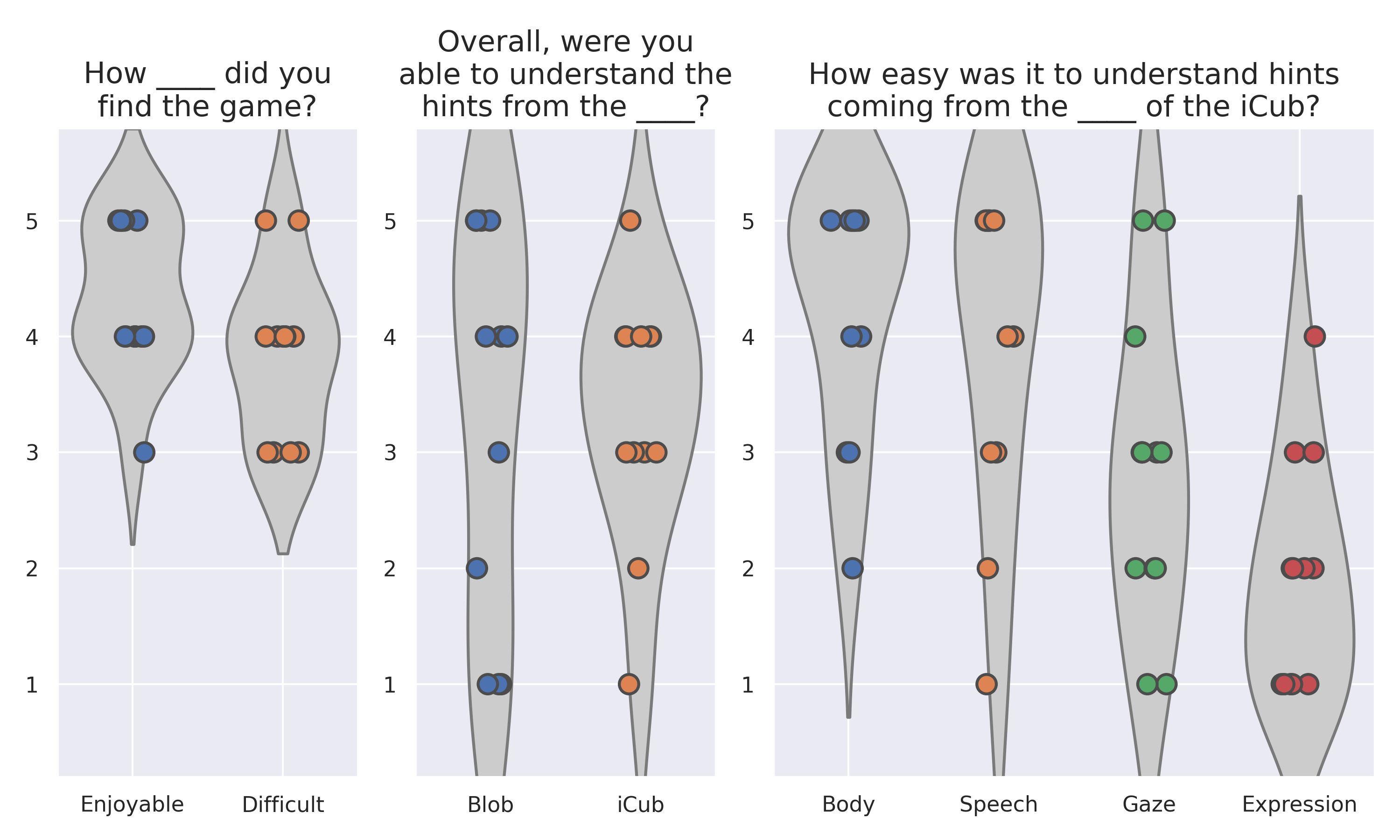

As a course project, I developed a scenario in which a humanoid robot would collaborate and provide hints for a modified variation of the Towers of Hanoi game. The purpose of this scenario was to test preferences in the robot's embodied communication style. Embodiment within this context refers to the capacity for an agent to perturb its environment. For this scenario, I identified four perturbatory channels that a humanoid robot could use in communication with a person: gaze, gesture, speech, and emotional expression.

For the project, I ran a mock user study with 12 participants to determine the effectiveness of the different communication styles.

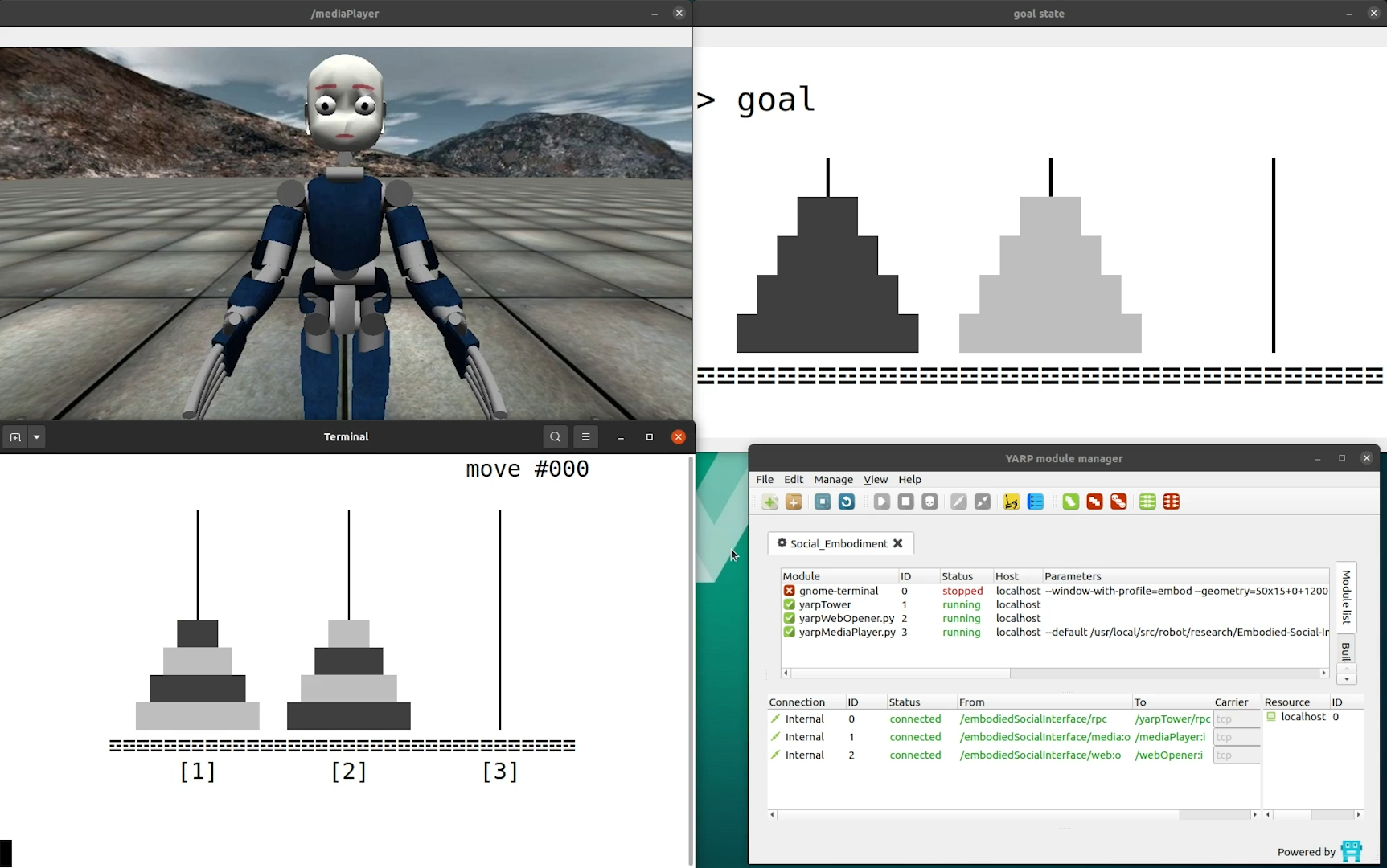

The game interface for the bicolor Towers of Hanoi game had a simulated version of the iCub humanoid robot above the game board. The robot would cycle through different communication styles to provide hints on the next best move. Below, a simple ASCII representation of the game board would show the current state of the game.
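
One way to compute a "next best move" hint and the distance from the goal state is a breadth-first search over board states. The sketch below is an assumption, not the project's code: it uses the classic single-colour rules (a disk may only sit on a larger disk) rather than the bicolor variation, and the function names are hypothetical. Under the classic rules, four disks on three pegs need 15 moves.

```python
from collections import deque

# A state is a tuple of three pegs; each peg is a tuple of disk sizes,
# listed from bottom to top.


def legal_moves(state):
    """Yield ((src, dst), next_state) for every legal classic-rules move."""
    for src, peg in enumerate(state):
        if not peg:
            continue
        disk = peg[-1]
        for dst, target in enumerate(state):
            if dst != src and (not target or target[-1] > disk):
                pegs = [list(p) for p in state]
                pegs[src].pop()
                pegs[dst].append(disk)
                yield (src, dst), tuple(tuple(p) for p in pegs)


def shortest_path(start, goal):
    """Breadth-first search; returns the list of moves from start to goal."""
    parents = {start: None}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if state == goal:
            moves = []
            while parents[state] is not None:
                state, move = parents[state]
                moves.append(move)
            return moves[::-1]
        for move, nxt in legal_moves(state):
            if nxt not in parents:
                parents[nxt] = (state, move)
                queue.append(nxt)
    raise ValueError("goal is not reachable from start")


def hint(state, goal):
    """Return (next best move or None, number of moves left to the goal)."""
    path = shortest_path(state, goal)
    return (path[0] if path else None), len(path)


start = ((4, 3, 2, 1), (), ())
goal = ((), (), (4, 3, 2, 1))
print(hint(start, goal))
```

The same search gives a participant's distance from the goal at any point in a game, by calling `hint` on the current board state.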

Subjective preferences collected from the participants for the different communication styles showed a preference towards the robot's gestures and speech.

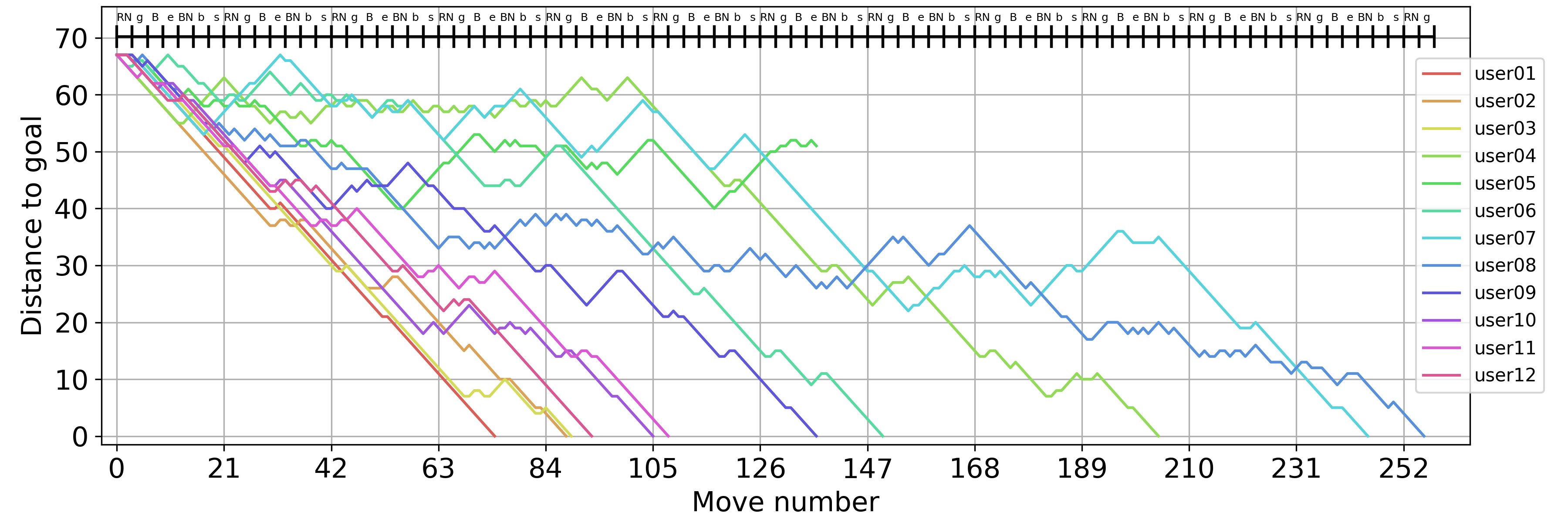

The distance of each participant from the goal state. An optimal solution to the three-peg, four-disk configuration used required 67 moves.

I published an early draft of this work at the HRI 2022 workshop on Joint Action, Adaptation, and Entrainment in Human-Robot Interaction (JAAE).

Audio Attention

During my undergraduate studies I worked on a project that involved the development of a biologically inspired audio attention system for the iCub humanoid robot.

The system was designed to mimic the human auditory system by using a binaural microphone setup to localize sound sources in the environment. The system would then use the envelope of the sound signal to determine the saliency of the sound source. The system was tested in a variety of noisy environments to determine its robustness to noise.

The source code can be found here.

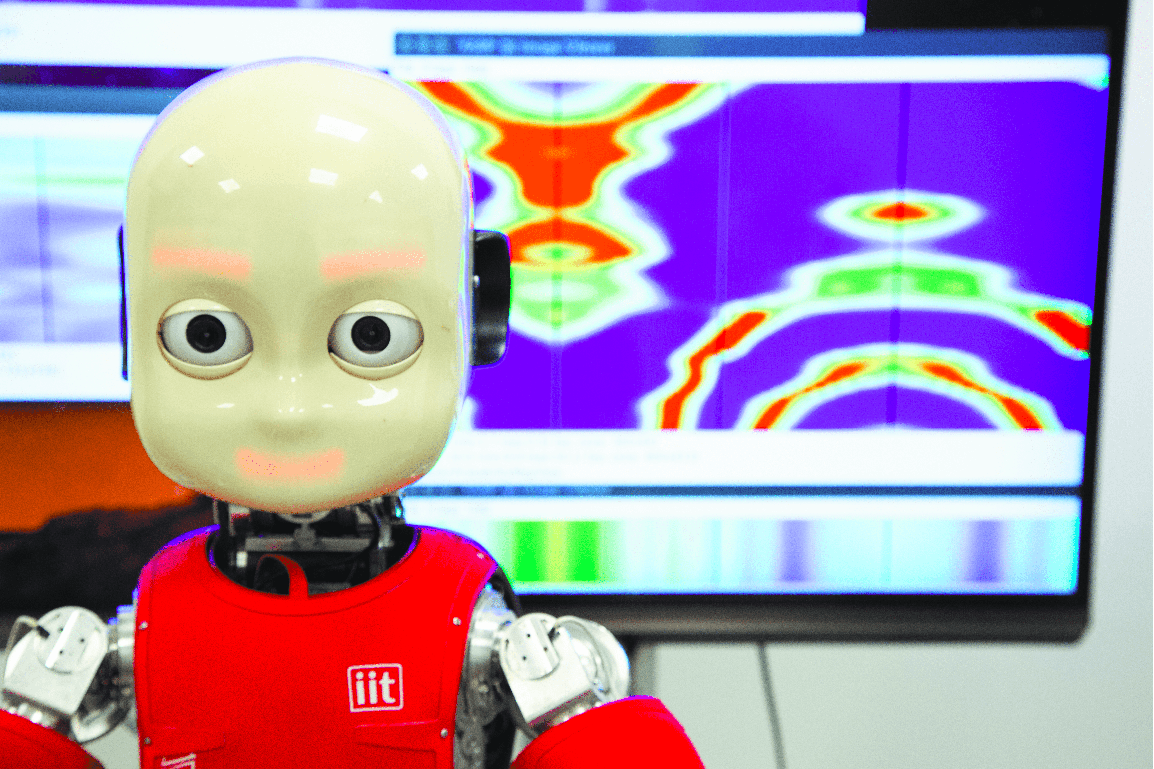

The iCub, Reddy, at the Italian Institute of Technology in Genoa, Italy. The robot has two microphones for ears, which introduces problems of spatial ambiguity when localizing sound sources.

The head of an iCub at the University of Lethbridge, surrounded by an array of speakers in a noise-dampening room.

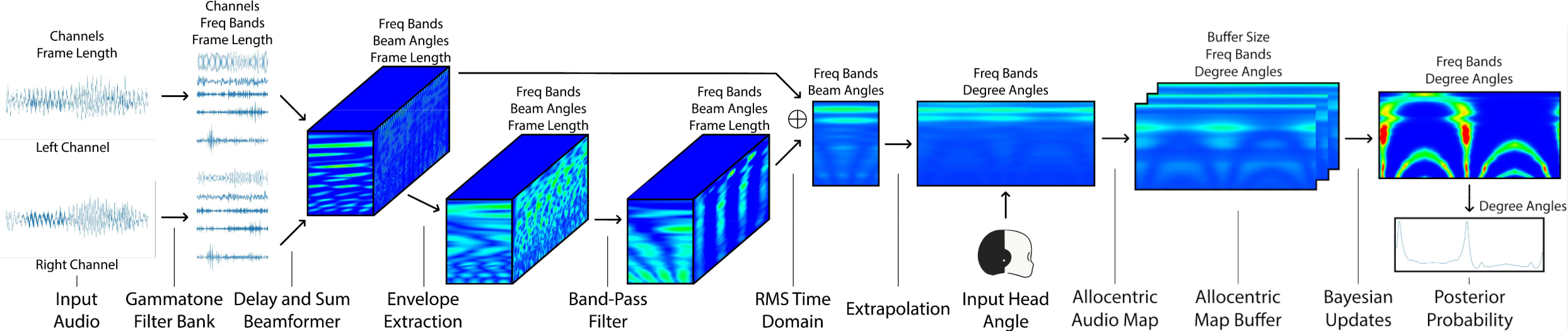

The complete audio attention pipeline. Audio is captured from the left and right microphones. These channels are passed to a gammatone filter bank that decomposes the audio signals into their spectral components. The left and right channels are then artificially shifted forwards and backwards in time with a delay-and-sum beamformer to simulate different times of arrival. A Hilbert transform is applied to the beamformed signals to compute the envelope, and then a band-pass filter is used to extract the energy of 4 Hz envelopes. The time domain is collapsed by taking the root mean square of the envelope energy to create an evidence map, which represents the belief of energy in the 180 degree field of view in front of the robot. We mirror this to the back field of view to create a 360 degree egocentric field of view and offset it based on the input head angle to make it allocentric. These maps are buffered and Bayesian updates are made to create a belief of where sound sources are in the environment.
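
The stages in this caption can be sketched roughly in NumPy. This is a simplified illustration under several assumptions, not the project's code: the gammatone filter bank is omitted (a single band is processed), delays are circular sample shifts with one angular bin per delay, the band-pass is a crude FFT mask around 4 Hz, and the evidence map is treated as an unnormalised likelihood in the Bayesian update. All function names and parameters are hypothetical.

```python
import numpy as np


def analytic_envelope(x):
    """Envelope from the analytic signal (FFT-based Hilbert transform)."""
    n = x.shape[-1]
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        weights[1:n // 2] = 2.0
    else:
        weights[1:(n + 1) // 2] = 2.0
    return np.abs(np.fft.ifft(np.fft.fft(x, axis=-1) * weights, axis=-1))


def bandpass_fft(x, fs, low, high):
    """Crude band-pass: zero every FFT bin outside [low, high] Hz."""
    spectrum = np.fft.rfft(x, axis=-1)
    freqs = np.fft.rfftfreq(x.shape[-1], d=1.0 / fs)
    spectrum[..., (freqs < low) | (freqs > high)] = 0.0
    return np.fft.irfft(spectrum, n=x.shape[-1], axis=-1)


def evidence_map(left, right, fs, max_delay):
    """Delay-and-sum, envelope, ~4 Hz band-pass, then RMS per delay."""
    delays = range(-max_delay, max_delay + 1)
    # np.roll is a circular shift, a simplification of a true delay line.
    beams = np.stack([left + np.roll(right, d) for d in delays])
    modulation = bandpass_fft(analytic_envelope(beams), fs, 2.0, 6.0)
    return np.sqrt(np.mean(modulation ** 2, axis=-1))


def to_allocentric(front_map, head_offset_bins):
    """Mirror the front map to the back, then rotate by the head angle."""
    full = np.concatenate([front_map, front_map[::-1]])
    return np.roll(full, head_offset_bins)


def bayes_update(prior, evidence, eps=1e-9):
    """Multiply the prior by the evidence and renormalise."""
    posterior = prior * (evidence + eps)
    return posterior / posterior.sum()


# Synthetic check: a 500 Hz tone modulated at 4 Hz, right channel lagging.
fs = 8000
t = np.arange(fs) / fs
source = (1 + 0.8 * np.sin(2 * np.pi * 4 * t)) * np.sin(2 * np.pi * 500 * t)
front = evidence_map(source, np.roll(source, 3), fs, max_delay=8)
belief = np.full(2 * front.size, 1.0 / (2 * front.size))
belief = bayes_update(belief, to_allocentric(front, head_offset_bins=2))
print(int(np.argmax(front)), belief.round(3))
```

Because the mirrored map is symmetric, a single update leaves two equally likely directions; it is the repeated updates at different head angles that let the front-back ambiguity resolve.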

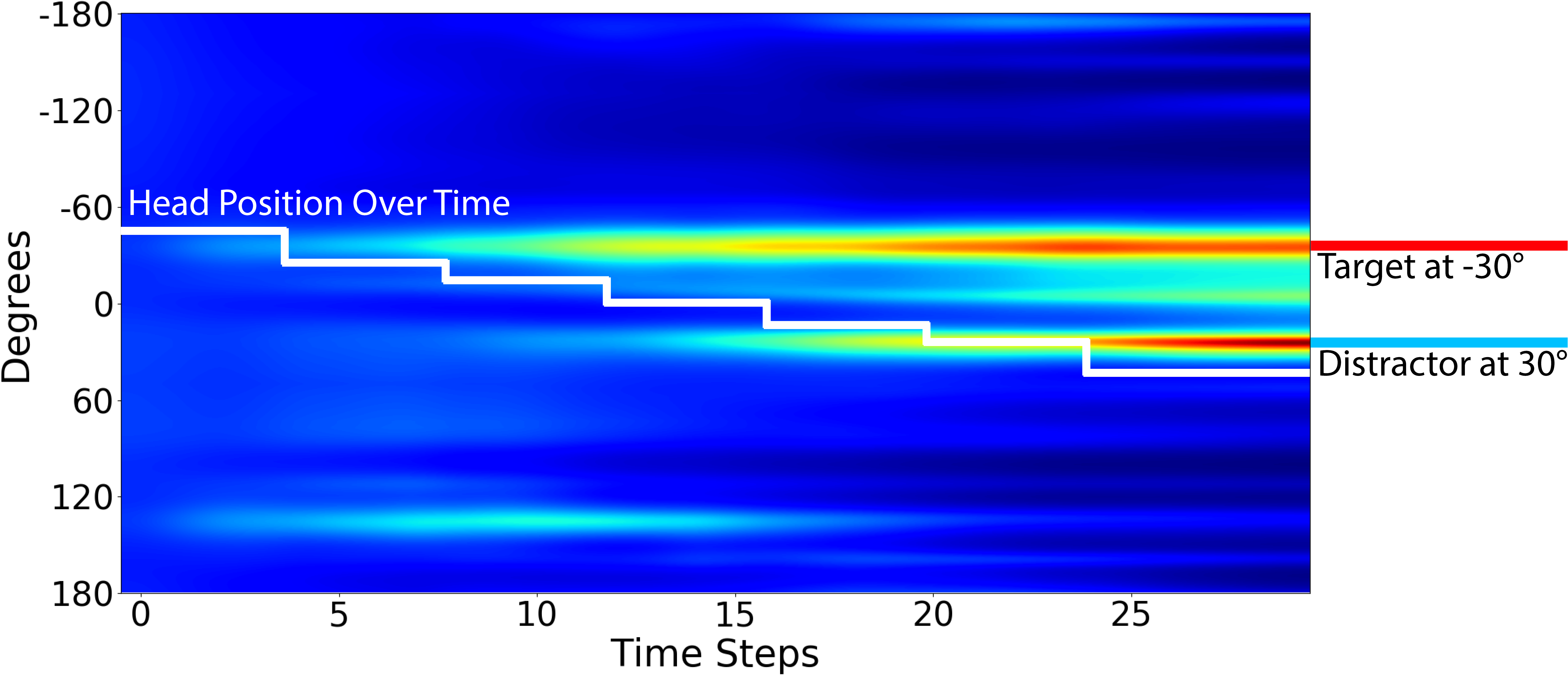

The belief of sound sources in the environment over time using an amplitude-only approach. As the head moves, the model can solve the front-back ambiguity problem but can't distinguish between the target speech and a noisy distraction.

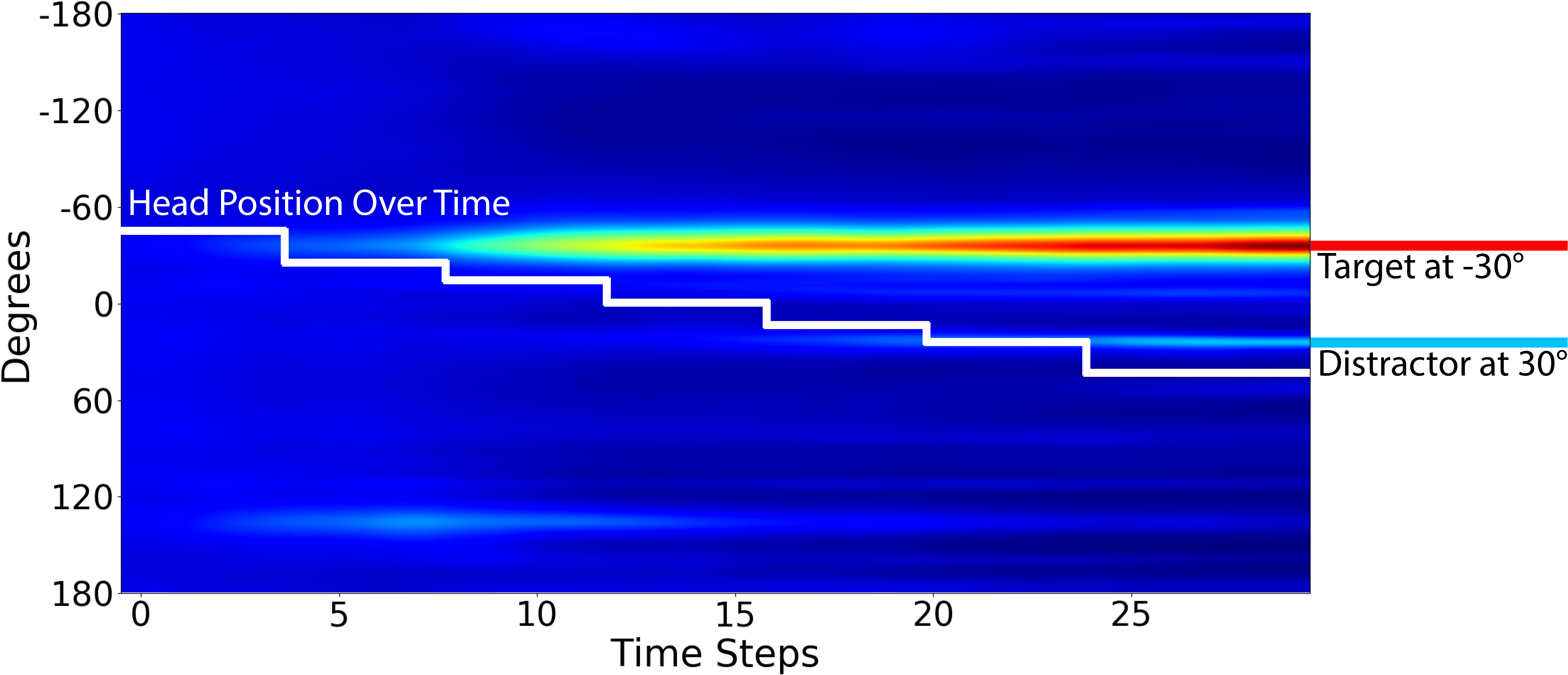

By applying the envelope filtering technique, the model builds a higher belief of where the human speech is located in the environment. The distraction will still ultimately be present; however, the saliency of the human speech is much higher.

Related publications:

iCub Social Engineering

I collaborated on a project with an international colleague which aimed to explore whether a robot can be used to help divert a person away from a variety of social engineering attacks. For this study, we used the FurHat robot platform to interact with participants in a remote setting.

Experimental session with the experimental laptop (left) controlled by the participant (right) via the Zoom platform.

We published this work at the ACM/IEEE Human-Robot Interaction conference.

iCub Treasure Hunt

I collaborated on a project with international colleagues which aimed to explore the idea of using an unreliable robot to assist in a treasure hunt game. The study involved the iCub robot providing hints to the participant on request; however, the robot would periodically malfunction. The goal of the study was to determine how a robot's failures during an interaction would degrade the quality of that interaction.

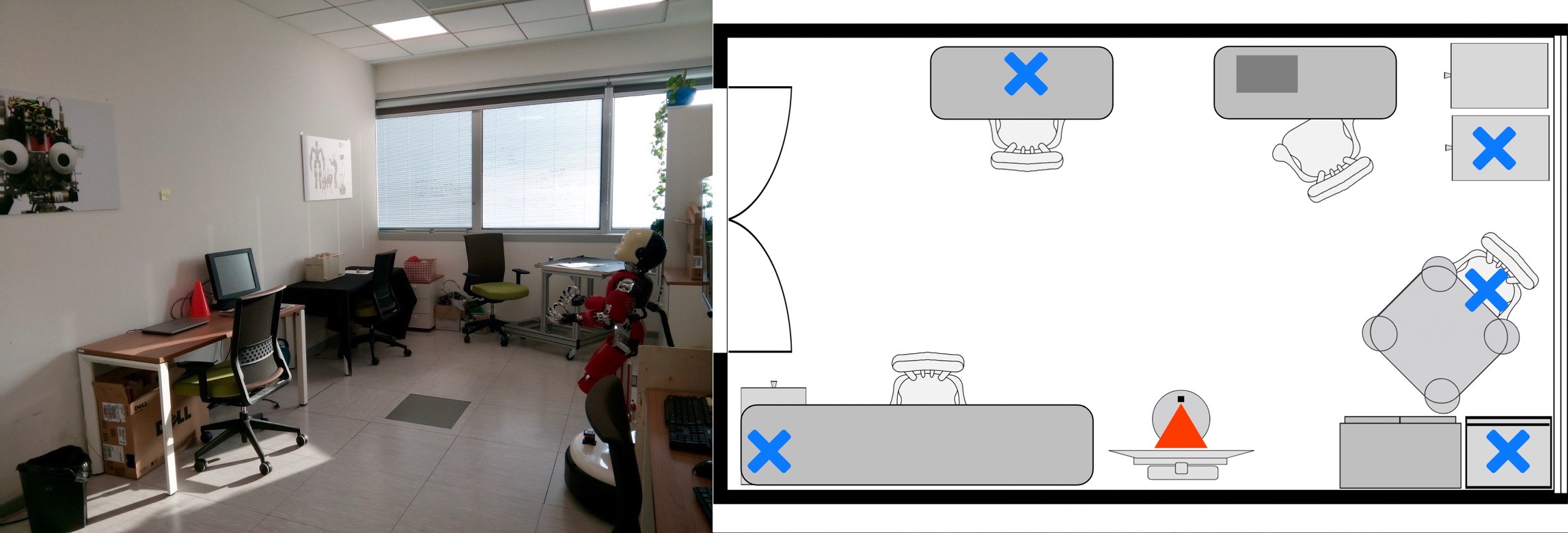

Experimental room (left). Schematic layout of the room showing the locations of the hidden objects and the robot's position (right).

We published this work in IEEE Robotics and Automation Letters.

Hobbies & Other Activities

Rock Climbing

Distance Running

Cycling

Urban Photography

Coffee

Pet Parent